Meaningful Assessment at Key Stage 3 :: CET

Assessment information has been used to drive improvement through the analysis of data to identify which pupils have required intervention, and what specific interventions they required. Schools have often used the process of question level analysis to guide this. This process has often begun too late, mainly due to the need for a large enough sample size to make robust judgements about question level data.

This case study focuses on how, by working collaboratively across Creative Education Trust, trust and school leaders and teachers have been able to generate meaningful question level data for National Curriculum subjects from Year 7 upwards, to allow early and effective curriculum intervention.

Background

At Creative Education Trust, we need internal assessment data to tell us something useful and ensure that we can analyse pupils’ ability to demonstrate their knowledge and skills. Data from internal “Trust” assessment can be useful in determining the progress of an individual pupil, but it has greatest impact when turned into information that displays a clear focus on how it can assist the development of teachers and the delivery of the curriculum across an entire school or group of schools.

We need teachers to be informed reliably and accurately about what each pupil, and group of pupils, knows and what they don’t know, as a result of their teaching. We also need to know the impact that the curriculum, both in terms of its sequencing and resourcing, has had on pupils’ ability to apply knowledge.

To ensure that the above is possible, an aligned curriculum needed to be in place, with aligned assessments produced that sit alongside this.

Implementation

From 2020, across Creative Education Trust, Middle Leaders have co-authored and collaboratively designed a secondary school curriculum in all KS3 National Curriculum subjects, applying shared values and an agreed understanding of the ambitious ‘powerful knowledge’ that all students need to know.

Alongside this, there is an aligned model of reviewing pupil performance on assessments, which is also co-authored by subject specialists. The aim of these assessments is to ensure that we systematically test students’ understanding across a large sample size, identify gaps in their knowledge, share best practice where it exists and develop new ways of delivering concepts where we know there are gaps in performance.

To ensure that our assessments are purposeful, subject leaders from across Creative Education Trust embarked on a process of training with Cambridge Assessment. Alongside this, leaders across the trust developed a process of quality assurance based on a framework created by Evidence Based Education (2018), “The Four Pillars of Assessment”. Within this resource, they propose that for assessment to be useful, it must have the following properties:

Purpose

Have a clear aim for what the assessment is trying to achieve.

Validity

Provide information which is both valuable and appropriate for the intended purpose.

Reliability

Ensure judgements made are accurate and consistent.

Value

Be worth the time and effort put into designing the assessment, in relation to its initial purpose.

From this summary, it can be suggested that clarity over the ‘purpose’ aspect of internal assessment is crucial in determining the usefulness of the information we generate. However, we are fully aware that formative assessment has many purposes, including helping to discuss with pupils their potential destinations, preparing them for external examinations, anticipating the results at school level of externally imposed accountability measures and informing curriculum planning.

These purposes may feel like they are antagonistic; however, through careful thought and design, they can be tied together to refine internal assessment.

Impact

The impact of this work has been clear in numerous areas:

- Reliable curriculum-level data from assessments

- Early curriculum intervention

- Informed curriculum review

- Granular subject-specific support

With over 2000 students sitting a Year 7 Geography assessment across Creative Education Trust, the data from the assessment can be classed as reliable, and inferences from the data are significantly more valid than would previously have been possible. Analysis of the question level data also allows the quality of question items to be reviewed, so if there was an access issue with a question, or if it was too easy or too hard, this information could be passed down to schools to inform their curriculum conversations after assessments.
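One common way to review item quality is the facility index: the mean mark achieved on a question divided by the marks available. The sketch below is illustrative only. The marks, the data layout and the 0.2 and 0.9 thresholds are assumptions made for the example, not figures or methods taken from the trust's analysis.

```python
from statistics import mean


def facility_index(marks, max_mark):
    """Mean mark achieved on a question as a fraction of the marks available."""
    return mean(marks) / max_mark


def flag_item(facility, too_hard=0.2, too_easy=0.9):
    """Label a question using illustrative (assumed) thresholds."""
    if facility < too_hard:
        return "review: possibly too hard, or an access issue"
    if facility > too_easy:
        return "review: possibly too easy"
    return "ok"


# Hypothetical marks for ten pupils on three questions.
responses = {
    "Q1": {"max_mark": 1, "marks": [1, 1, 1, 1, 1, 1, 1, 1, 1, 1]},
    "Q2": {"max_mark": 4, "marks": [2, 3, 1, 4, 2, 0, 3, 2, 1, 2]},
    "Q3": {"max_mark": 2, "marks": [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]},
}

report = {
    question: flag_item(facility_index(data["marks"], data["max_mark"]))
    for question, data in responses.items()
}

for question, verdict in report.items():
    print(question, verdict)
```

A flag is a prompt for a subject specialist to look at the question, not a verdict: a very low facility can reflect a gap in teaching as easily as a poorly worded item.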

This reliable curriculum level data has allowed data aggregation to take place. Using tools such as Smartgrade, Power BI and Excel, data has been shared so that each school has a benchmark against a MAT average by question and curriculum topic area (e.g. Natural Hazards). This allows schools to identify areas of strength and improvement in pupil, class and school level data, and MAT leaders to do the same across all schools.
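The benchmarking described above can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical flat export of (school, topic, marks awarded, marks available) rows; the school names and marks are invented, and it does not reproduce how Smartgrade, Power BI or Excel perform the calculation.

```python
from collections import defaultdict

# Hypothetical rows: (school, topic, marks_awarded, marks_available).
rows = [
    ("School A", "Natural Hazards", 6, 10),
    ("School A", "Map Skills", 8, 10),
    ("School B", "Natural Hazards", 4, 10),
    ("School B", "Map Skills", 9, 10),
]


def percent_by(rows, key):
    """Pool marks for each group and return the percentage of marks achieved."""
    totals = defaultdict(lambda: [0, 0])
    for row in rows:
        group = key(row)
        totals[group][0] += row[2]  # marks awarded
        totals[group][1] += row[3]  # marks available
    return {group: 100 * got / available for group, (got, available) in totals.items()}


# MAT average per topic, pooled across every school.
mat_average = percent_by(rows, key=lambda r: r[1])

# Each school's percentage per topic.
school_average = percent_by(rows, key=lambda r: (r[0], r[1]))

# Gap in percentage points: positive means above the MAT average.
gaps = {
    (school, topic): round(pct - mat_average[topic], 1)
    for (school, topic), pct in school_average.items()
}

for (school, topic), gap in sorted(gaps.items()):
    print(f"{school} | {topic}: {gap:+.1f} points vs MAT average")
```

Pooling marks before dividing weights the MAT average by the number of pupils, so larger schools contribute more to it. Averaging the school percentages instead would weight every school equally; which is more appropriate depends on the question being asked.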

The use of Smartgrade specifically has not only improved conversations around topic level assessment data, but it has also allowed the production of a standardised grade for students sitting KS3 assessments. Through this process of standardisation, school leaders have become more aware of how, on average, pupils in their setting are performing against a MAT average in KS3 assessments.

Through the identification of misconceptions and gaps in pupil knowledge in KS3, early intervention can take place, before these gaps and misconceptions multiply and morph, affecting future learning. Leaders at school level have been able to put in place reteach lessons, or create resources, focused on specific and targeted areas of the curriculum.

Longer term, the curriculum review process has also been impacted positively through the redesigned KS3 assessment, and the data generated from it. By sharing data on curriculum gaps, leaders have been able to review local curriculum sequencing and resourcing, with more targeted and specific questions. They are also able to make granular requests for support. For example, rather than simply asking, “Do we know a school that has a high quality resource on map skills?” they can ask, “Do we know a school that has a high quality resource on map skills, specifically 6-figure grid references?”. While the answer to this might not be the school that has performed highest on the assessment in that area, the data generated provides a clearer indication of where the answer may be.

More meaningful KS3 question and topic level data has also led to better CPD and support for teachers. Where trends of underperformance by pupils on specific topics exist over time, the analysis of this data allows for the identification of specific causes, and therefore more bespoke support. For example, where previously a limited school level sample of 200 Year 7 pupils sitting a history assessment may have shown lower performance on analysing judgements, a MAT-wide Year 7 assessment, with topic level data aggregated, may show there is a larger issue across the whole trust on the evaluation of sources for a specific time period. This has then led to subject specific CPD being offered to support teachers and leaders.

Next Steps

While great strides have been made in the aggregation of KS3 assessment data, how it is combined with data from other areas, such as subject knowledge audits and KS4 performance outcomes, remains an area of work. Through combining these areas, the CPD requirements at MAT, school and teacher level can be diagnosed with a laser-sharp focus, and support swiftly provided.

Conclusion

Assessment data, and the inferences made from it, are only as good as the reliability of the assessments from which the data is generated, and the sample size of the pupils sitting the assessment. If time and effort are put into improving the reliability of the assessment, through effective assessment CPD, and the size of the sample that sits it, through curriculum alignment, then the data produced will be of such a high quality and granular nature that it allows tangible improvements in pupil outcomes.

Nimish Lad

Creative Education Trust

Read more case studies

View all

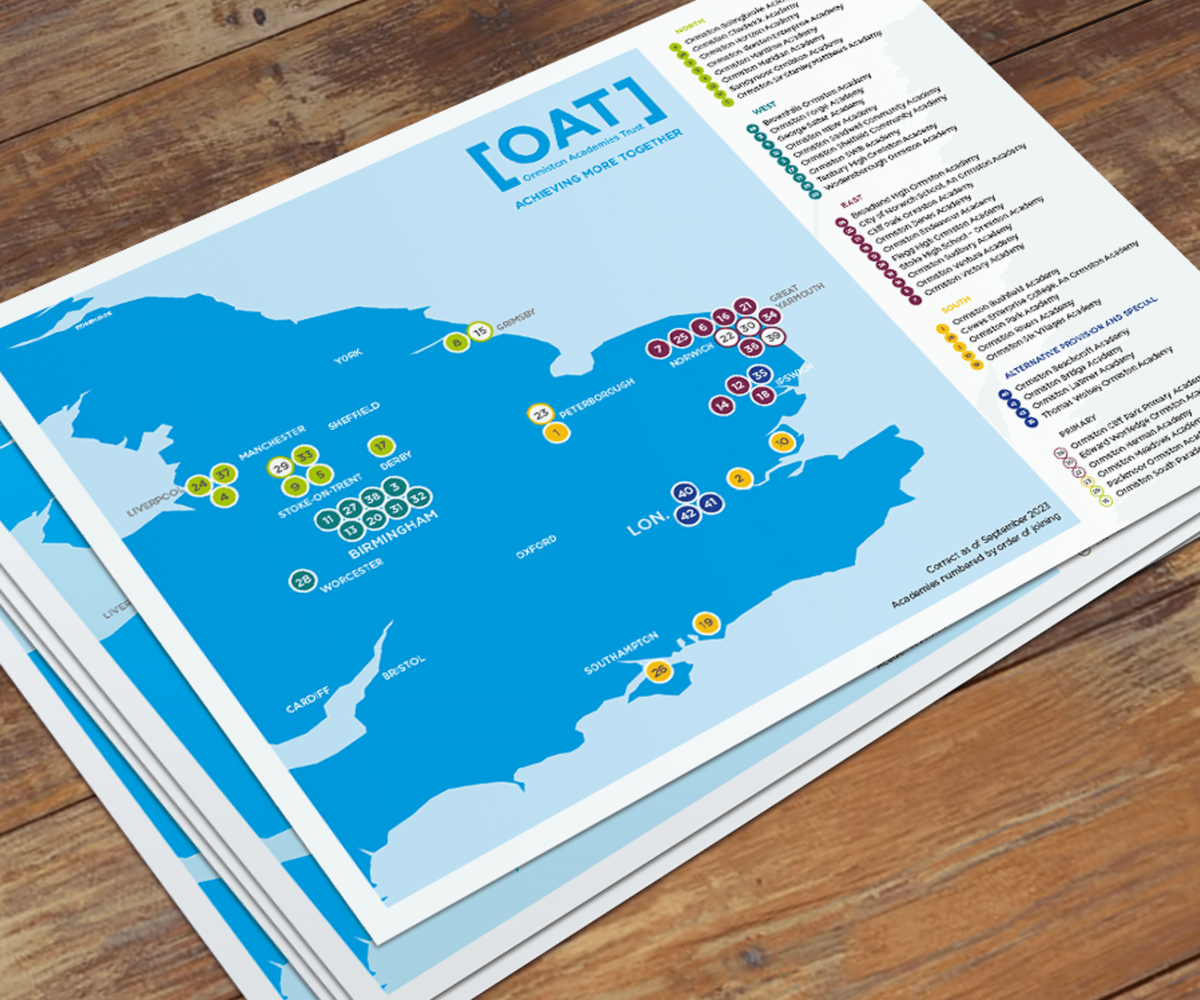

Strategic Progress Reporting & MAT Census :: OAT

Implementing MAT Dashboards with Google Tools :: FCAT